Mastering Machine Learning with Python: Foundations and Key Concepts

In today's era of Artificial Intelligence (AI), scaling businesses and streamlining workflows has never been easier or more accessible. AI and machine learning help companies make informed decisions by forecasting likely outcomes from historical data, often with just a few lines of code. Before taking a significant risk, wouldn't it help to know whether it's worth it? Have you ever wondered how AI and machine learning models are trained to make such accurate predictions?

In this article, we will explore, hands-on, how to create a machine-learning model that can make predictions from our input data. Join me on this journey as we delve into these principles together.

This is the first part of a series on mastering machine learning, focusing on the foundations and key concepts. In the second part, we will dive deeper into advanced techniques and real-world applications.

Introduction:

Machine Learning (ML) essentially means training a model to solve problems by learning from data. It involves feeding large amounts of input data to a model so that it can discover patterns in that data. A model's accuracy depends heavily on the quantity and quality of the data it is fed, as well as on the choice of algorithm.

Machine learning extends beyond making predictions for enterprises; it powers innovations like self-driving cars, robotics, and much more. With continuous advancements in ML, there's no telling what incredible achievements lie ahead - it's simply amazing, right?

It's no surprise that Python remains one of the most sought-after programming languages for machine learning. Its extensive libraries, such as Scikit-Learn and Pandas, and its easy-to-read syntax make it well suited to ML tasks. Python offers a simple, well-structured environment that lets developers focus on solving problems rather than on the language itself. As an open-source language, it also benefits from contributions worldwide, which has given it an especially rich ecosystem for data science and machine learning.

Fundamentals Of Machine Learning

Machine Learning (ML) is a vast and complex field that requires years of continuous learning and practice. While it's impossible to cover everything in this article, let's look into some important fundamentals of machine learning, specifically:

- Supervised Machine Learning: As the name suggests, supervised machine learning involves supervision in the form of labeled examples. The machine is given input data (i) together with the correct output (j) for each example, and a learning algorithm uses these pairs to learn a function that maps inputs to outputs. Once trained, the model can predict the output (j) for a new input (i) it has not seen before. Supervised ML can be further classified into:

Regression: The model learns from labeled inputs (i) to predict a continuous numerical output (j). For example, a regression algorithm can predict the price of an item based on its size and other features.

Classification: The model predicts which category (class) an input belongs to, based on the attributes it has learned to associate with each class. For example, predicting whether a product review is positive, negative, or neutral.

- Unsupervised Machine Learning: Unsupervised machine learning works with unlabeled data. Unlike supervised learning, where models are trained on labeled examples, unsupervised learning algorithms identify patterns and relationships in data without knowing the outcomes in advance. For example, grouping customers based on their purchasing behavior.
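To make these three ideas concrete, here is a minimal sketch using Scikit-Learn on tiny made-up data. The sizes, prices, ratings, and customer figures are all hypothetical, and LinearRegression, DecisionTreeClassifier, and KMeans are simply convenient example algorithms for regression, classification, and clustering; many others would work.

```python
from sklearn.cluster import KMeans
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeClassifier

# --- Supervised regression: labeled inputs -> a continuous number ---
# Hypothetical item sizes (feature) and prices (label); here price = 3 * size.
sizes = [[50], [80], [100], [120]]
prices = [150, 240, 300, 360]
regressor = LinearRegression().fit(sizes, prices)
predicted_price = regressor.predict([[90]])[0]
print(round(predicted_price, 2))  # a continuous value close to 270

# --- Supervised classification: labeled inputs -> a discrete class ---
# Hypothetical star ratings (feature) and review sentiment (label).
ratings = [[1], [2], [3], [4], [5]]
sentiments = ["negative", "negative", "neutral", "positive", "positive"]
classifier = DecisionTreeClassifier(random_state=0).fit(ratings, sentiments)
predicted_sentiment = classifier.predict([[5]])[0]
print(predicted_sentiment)  # one of the known classes

# --- Unsupervised clustering: no labels at all ---
# Hypothetical customers described by [annual spend, store visits].
customers = [[200, 2], [220, 3], [5000, 40], [5200, 42]]
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(customers)
cluster_ids = kmeans.labels_
print(cluster_ids)  # the group each customer was assigned to
```

Note that only the clustering step never sees a label: KMeans groups the customers purely by how similar their numbers are.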

Setting Up Your Environment

When setting up your environment to create your first model, it's essential to understand some basic steps in ML and familiarize yourself with the libraries and tools we will explore in this article.

Steps in Machine Learning:

- Import the Data: Gather the data you need for your analysis.

- Clean the Data: Ensure your data is in good and complete shape by handling missing values and correcting inconsistencies.

- Split the Data: Divide the data into training and test sets.

- Create a Model: Choose your preferred algorithm to analyze the data and build your model.

- Train the Model: Use the training set to teach your model.

- Make Predictions: Use the test set to make predictions with your trained model.

- Evaluate and Improve: Assess the model's performance and refine it based on the outputs.

Common Libraries and Tools:

NumPy: Known for providing multidimensional arrays, NumPy is fundamental for numerical computations.

Pandas: A data analysis library that offers data frames (two-dimensional data structures similar to Excel spreadsheets) with rows and columns.

Matplotlib: Matplotlib is a two-dimensional plotting library for creating graphs and plots.

Scikit-Learn: One of the most popular machine learning libraries, providing many common algorithms such as decision trees, support vector machines, and basic neural networks, along with tools for splitting data and evaluating models.
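A quick sketch of what NumPy arrays and Pandas DataFrames look like in practice; the array values, column names, and ages are made up for illustration.

```python
import numpy as np
import pandas as pd

# NumPy: a two-dimensional (multidimensional) array of numbers.
matrix = np.array([[1, 2, 3],
                   [4, 5, 6]])
print(matrix.shape)        # (2, 3): 2 rows, 3 columns
column_sums = matrix.sum(axis=0)
print(column_sums)         # [5 7 9]

# Pandas: a DataFrame with labeled rows and columns, like a spreadsheet.
# The column names and values below are hypothetical.
users = pd.DataFrame({
    "age": [25, 32, 47],
    "gender": ["female", "male", "female"],
})
print(users)
average_age = users["age"].mean()
print(average_age)         # mean of the "age" column
```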

Recommended Development Environment:

Writing a model as a plain script in a standard IDE such as VS Code, or in a terminal, makes it harder to inspect the data as you write code. For our learning purposes, the recommended environment is Jupyter Notebook, an interactive platform where you can write and execute code, visualize data, and document the process in one place.

Step-by-Step Setup:

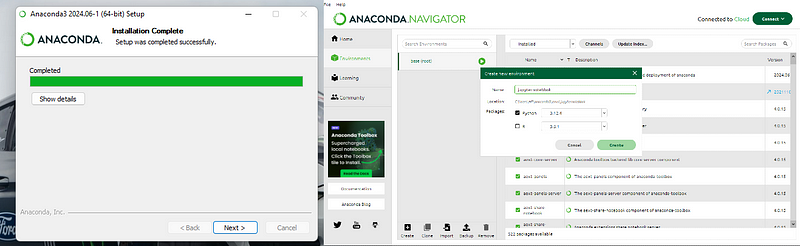

Download Anaconda:

Anaconda is a popular distribution of Python and R for scientific computing and data science. It includes the Jupyter Notebook and other essential tools.

Download Anaconda from this link.

Install Anaconda:

Follow the installation instructions based on your operating system (Windows, macOS, or Linux).

After the installation is complete, you will have access to the Anaconda Navigator, which is a graphical interface for managing your Anaconda packages, environments, and notebooks.

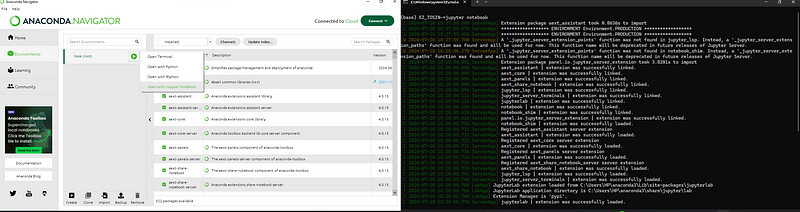

Launching Jupyter Notebook:

Open the Anaconda Navigator.

In the Navigator, click on the "Environments" tab.

Select the "base (root)" environment, and then click "Open with Terminal" or "Open Terminal" (the exact wording may vary depending on the OS).

In the terminal window that opens, type the command `jupyter notebook` and press Enter.

This command will launch the Jupyter Notebook server and automatically open a new tab in your default web browser, displaying the Jupyter Notebook interface.

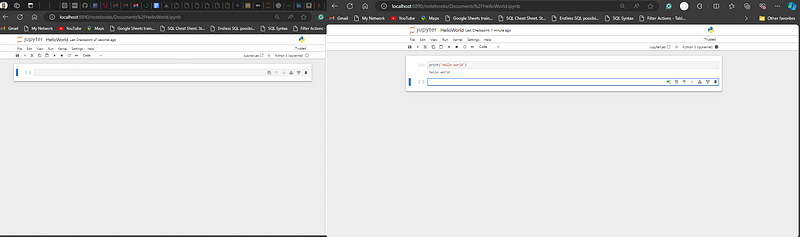

Using Jupyter Notebook:

The browser window will show a file directory where you can navigate to your project folder or create new notebooks.

Click "New" and select "Python 3" (or the appropriate kernel) to create a new Jupyter Notebook.

You can now start writing and executing your code in the cells of the notebook. The interface allows you to document your code, visualize data, and explore datasets interactively.
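Once the notebook is open, a first cell like the following can confirm that the libraries used in this article are available. This is a small sketch: the helper name `library_versions` is hypothetical, and Anaconda's full distribution should already include all four packages (Scikit-Learn is imported under the name `sklearn`).

```python
import importlib

# Libraries used in this article (Scikit-Learn's import name is "sklearn").
LIBRARIES = ["numpy", "pandas", "matplotlib", "sklearn"]


def library_versions(names):
    """Return {name: version string, or None if the library is missing}."""
    versions = {}
    for name in names:
        try:
            module = importlib.import_module(name)
            versions[name] = getattr(module, "__version__", "unknown")
        except ImportError:
            versions[name] = None
    return versions


for name, version in library_versions(LIBRARIES).items():
    print(f"{name}: {version if version else 'NOT INSTALLED'}")
```

If a library shows as not installed, it can usually be added from the Environments tab in Anaconda Navigator.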

Building Your First Machine Learning Model

To build your first model, we will follow the machine learning steps discussed earlier:

- Import the Data

- Clean the Data

- Split the Data

- Create a Model

- Train the Model

- Make Predictions

- Evaluate and Improve

Now, let's consider a scenario involving an online bookstore where users sign up and provide information such as their name, age, and gender. Based on each user's profile, we aim to recommend books they are likely to buy, building a model that helps boost sales.

First, we need to feed the model with sample data from existing users. The model will learn patterns from this data to make predictions. When a new user signs up, we can tell the model, "Hey, we have a new user with this profile. What kind of book are they likely to be interested in?" The model will then recommend, for instance, a history or a romance novel, and based on that, we can make personalized suggestions to the user.

Let's break down the process step-by-step:

- Import the Data: Load the dataset containing user profiles and their book preferences.

- Clean the Data: Handle missing values, correct inconsistencies, and prepare the data for analysis.

- Split the Data: Divide the dataset into training and testing sets to evaluate the model's performance.

- Create a Model: Choose a suitable machine learning algorithm to build the recommendation model.

- Train the Model: Train the model using the training data to learn the patterns and relationships within the data.

- Make Predictions: Use the trained model to predict book preferences for new users based on their profiles.

- Evaluate and Improve: Assess the model's accuracy using the testing data and refine it to improve its performance.

By following these steps, you will be able to build a machine-learning model that effectively recommends books to users, enhancing their experience and boosting sales for the online bookstore. You can gain access to the datasets used in this tutorial here.
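As a rough illustration of the Clean the Data step for this scenario, the sketch below works on a tiny hypothetical table. The column names (`name`, `age`, `gender`, `Genre`), the values, and the decision to drop `name` are assumptions; the tutorial's actual dataset may be laid out differently.

```python
import pandas as pd

# Hypothetical sign-up data; the tutorial's real dataset may use other columns.
raw_users = pd.DataFrame({
    "name":   ["Ada", "Ben", "Chloe", "Dan"],
    "age":    [23, 31, 35, 41],
    "gender": ["Female", "male ", "female", "MALE"],
    "Genre":  ["Romance", "History", "Romance", "History"],
})

# Drop the name column: it identifies a user but is unlikely to predict taste.
users = raw_users.drop(columns=["name"])

# Correct inconsistencies: trim stray spaces and unify capitalization.
users["gender"] = users["gender"].str.strip().str.lower()

# Encode gender as numbers, since most Scikit-Learn models expect numeric features.
users["gender"] = users["gender"].map({"female": 0, "male": 1})

# Separate the features (X) from the target (y).
X = users.drop(columns=["Genre"])
y = users["Genre"]
print(X)
print(y.tolist())
```

Mapping categories to integers like this is the simplest option; for columns with more than two categories, one-hot encoding (for example with `pd.get_dummies`) is often preferred because it does not imply an order between categories.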

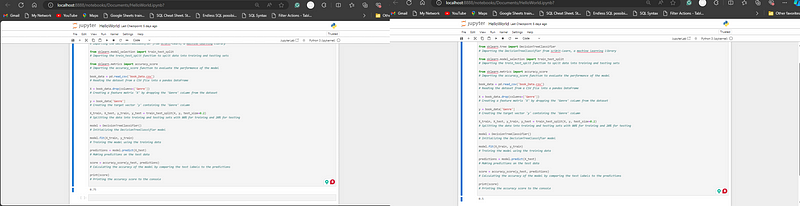

Let's walk through a sample code snippet to illustrate the process of testing the accuracy of the model:

- Import the necessary libraries:

```python
import pandas as pd
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
```

We start by importing the essential libraries. pandas is used for data manipulation and analysis, while DecisionTreeClassifier, train_test_split, and accuracy_score are from Scikit-learn, a popular machine learning library.

- Load the dataset:

```python
book_data = pd.read_csv('book_Data.csv')
```

Read the dataset from a CSV file into a pandas DataFrame.

- Prepare the data:

```python
X = book_data.drop(columns=['Genre'])
y = book_data['Genre']
```

Create a feature matrix X by dropping the 'Genre' column from the dataset and a target vector y containing the 'Genre' column.

- Split the data:

```python
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)
```

Split the data into training and testing sets with 80% for training and 20% for testing.

- Initialize and train the model:

```python
model = DecisionTreeClassifier()
model.fit(X_train, y_train)
```

Initialize the DecisionTreeClassifier model and train it using the training data.

- Make predictions and evaluate the model:

```python
predictions = model.predict(X_test)
score = accuracy_score(y_test, predictions)
print(score)
```

Make predictions on the test data and calculate the accuracy of the model by comparing the test labels to the predictions. Finally, print the accuracy score to the console.

In summary, the code imports the libraries, reads the CSV file into a pandas DataFrame, separates the feature matrix X from the target vector y, splits the data into training and testing sets, trains a DecisionTreeClassifier on the training set, and measures its accuracy by comparing its predictions on the test set with the true test labels.

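Results will vary depending on the data you use, and also from run to run, because train_test_split shuffles the rows randomly before splitting them. For instance, one run of this code displayed an accuracy score of 0.7, but running it again, or running it on a different dataset, may show 0.5. The accuracy score is the fraction of test predictions that were correct, so it ranges from 0 to 1, and a higher score indicates a more accurate model.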
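To see this variation directly, and to make results repeatable, you can pass a fixed `random_state` to train_test_split. The sketch below uses Scikit-Learn's bundled iris dataset as a stand-in because book_Data.csv may not be at hand; the helper `split_and_score` is hypothetical.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def split_and_score(X, y, seed):
    """Split with a fixed seed, train a decision tree, return test accuracy."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    model = DecisionTreeClassifier(random_state=0)
    model.fit(X_train, y_train)
    return accuracy_score(y_test, model.predict(X_test))


# Stand-in data: Scikit-Learn's bundled iris dataset, NOT the bookstore data.
X, y = load_iris(return_X_y=True)

# Different seeds -> different train/test splits -> possibly different scores.
scores = {seed: split_and_score(X, y, seed) for seed in range(5)}
for seed, score in scores.items():
    print(f"random_state={seed}: accuracy={score:.2f}")

# The same seed reproduces the same split and therefore the same score.
repeat_score = split_and_score(X, y, 0)
print(repeat_score == scores[0])  # True
```

Fixing `random_state` is handy while experimenting, since it lets you tell whether a change to the model, rather than a lucky split, moved the score.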

Data Preprocessing:

Now that you've successfully created your model, note that the data used to train it is crucial to the accuracy and reliability of its predictions. In Mastering Data Analysis: Unveiling the Power of Fairness and Bias in Information, I discussed in depth the importance of data cleaning and data fairness. Depending on what you intend to do with your model, consider whether your data is fair and free of bias. Data cleaning is a vital part of machine learning because it ensures that your model is trained on accurate, unbiased data. Key considerations include:

Removing Outliers: Ensure that the data does not contain extreme values that could skew the model's predictions.

Handling Missing Values: Address any missing data points to avoid inaccurate predictions.

Standardizing Data: Make sure the data is in a consistent format, allowing the model to interpret it correctly.

Balancing the Dataset: Ensure that your dataset represents all categories fairly to avoid bias in predictions.

Ensuring Data Fairness: Check for any biases in your data that could lead to unfair predictions and take steps to mitigate them.

By addressing these considerations, you help ensure that your model is not only accurate but also fair and reliable, providing meaningful predictions.
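Here is a minimal pandas sketch of several of these checks on a small hypothetical table: filling a missing value, removing an outlier, inspecting class balance, and z-score scaling as one reading of "standardizing". The median fill and the 1.5 * IQR outlier rule are common conventions chosen for illustration, not the only options. Fairness checks depend on domain context, so they are not shown.

```python
import pandas as pd

# Hypothetical user data: one missing age and one extreme (likely erroneous) age.
users = pd.DataFrame({
    "age":   [22, 25, 31, None, 28, 35, 240],
    "Genre": ["Romance", "History", "History", "Romance",
              "Romance", "History", "Romance"],
})

# Handling missing values: fill the gap with the median age.
users["age"] = users["age"].fillna(users["age"].median())

# Removing outliers: keep ages within 1.5 * IQR of the middle 50% of values.
q1, q3 = users["age"].quantile([0.25, 0.75])
iqr = q3 - q1
in_range = users["age"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)
users = users[in_range].copy()

# Standardizing: rescale age to mean 0 and standard deviation 1 (z-scores).
users["age_scaled"] = (users["age"] - users["age"].mean()) / users["age"].std()

# Balancing: check how many examples each genre has.
print(users["Genre"].value_counts())
```

Scaling mainly matters for distance- or gradient-based algorithms such as k-means or logistic regression; decision trees split on thresholds and are not affected by it.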

Conclusion:

Machine learning is a powerful tool that can transform data into valuable insights and predictions. In this article, we explored the fundamentals of machine learning, focusing on supervised and unsupervised learning, and demonstrated how to set up your environment and build a simple machine learning model using Python and its libraries. By following these steps and experimenting with different algorithms and datasets, you can unlock the potential of machine learning to solve complex problems and make data-driven decisions.

In the next part of this series, we will dive deeper into advanced techniques and real-world applications of machine learning, exploring topics such as feature engineering, model evaluation, and optimization. Stay tuned for more insights and practical examples to enhance your machine-learning journey.

Additional Resources:

Programming with Mosh

Machine Learning Tutorial, GeeksforGeeks
